How to Install Ollama on Windows, Mac, and Linux

- Author: adityo

- Tag: Ollama

If you’re curious about trying open-source AI, Ollama is one of the easiest tools to get started with. It lets you run AI models directly on your computer—no cloud subscription required. Before you can use it, you’ll need to install Ollama. Here’s a step-by-step guide for Windows, macOS, and Linux users.

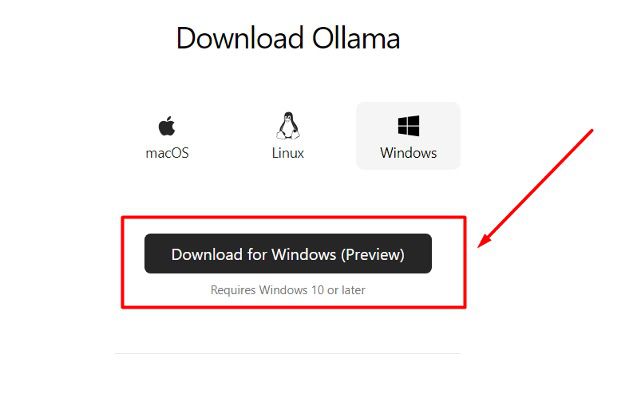

1. Install Ollama on Windows

Installing Ollama on Windows is simple since it comes with an official installer.

Steps:

- Visit the official website: https://ollama.com

- Download the Windows installer (.exe).

- Run the installer and follow the setup instructions.

- Once finished, open Command Prompt or PowerShell.

- Test the installation by running:

Bash

ollama run llama2

If it works, you’ll see the model load and a prompt appear, ready to chat. The first run downloads the model, so it can take a few minutes.

2. Install Ollama on macOS

Mac users can install Ollama just as easily with the official macOS app.

Steps:

- Go to the official Ollama site: https://ollama.com

- Download the macOS version of the app.

- Open the downloaded file, move Ollama to your Applications folder, and launch it once to finish the setup.

- After installation, open Terminal.

- Try running:

Bash

ollama run mistral

If the model responds, the setup is complete.
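If the test command fails with "command not found", the CLI is probably not on your PATH yet. The sketch below is one way to check this from a POSIX shell such as the macOS Terminal before pulling a model. The function name `check_ollama` is made up for this example; `ollama --version` is the CLI's own version flag.

```shell
#!/bin/sh
# Report whether the ollama CLI is reachable from this shell.
# check_ollama is a hypothetical helper name used only in this sketch.
check_ollama() {
    if command -v ollama >/dev/null 2>&1; then
        echo "ollama found at $(command -v ollama)"
        ollama --version
    else
        echo "ollama not found on PATH"
        return 1
    fi
}

check_ollama || echo "Open a new terminal window or reinstall, then try again."
```

If the CLI is found, the version line confirms which build you installed before you spend time downloading a model.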

3. Install Ollama on Linux

On Linux, installation is done via the terminal using a shell script.

Steps:

- Open your Terminal.

- Run the following command:

Bash

curl -fsSL https://ollama.com/install.sh | sh

- Wait until the installation completes.

- Test it with:

Bash

ollama run gemma

If you see the model running, you’re good to go.
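On any platform, the `ollama` command talks to a local server that listens on port 11434 by default. If `ollama run` hangs or reports a connection error, a quick check like the one below can tell you whether that server is up. This is a minimal sketch: `server_status` is a hypothetical helper name, and the 3-second timeout is an arbitrary choice.

```shell
#!/bin/sh
# Check whether the local Ollama server answers HTTP requests.
# server_status is a hypothetical helper; 11434 is Ollama's default port.
server_status() {
    url="${1:-http://localhost:11434}"
    if curl -fsS --max-time 3 "$url" >/dev/null 2>&1; then
        echo "Ollama server is responding at $url"
    else
        echo "No response from $url"
    fi
}

server_status
```

If there is no response, starting the server manually with `ollama serve` in a second terminal is usually enough to get going.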

Tips After Installing Ollama

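- Check your computer’s specs. Larger models need more RAM and processing power; the Ollama README suggests at least 8 GB of RAM for 7B models, 16 GB for 13B models, and 32 GB for 33B models.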

- Start with smaller models (like Mistral or Phi) before trying heavier ones.

- Use `ollama list` to see which models are already downloaded to your system.
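To make the first two tips concrete, here is a rough sketch that reads your total RAM and maps it to a model size class. The thresholds follow the guidance in the Ollama README (about 8 GB for 7B models, 16 GB for 13B, 32 GB for 33B). The function names `suggest_model_size` and `total_ram_gb` are invented for this example, and the result is only a starting point, not a guarantee that a model will run well.

```shell
#!/bin/sh
# Map RAM in GB to a model size class, using the README guidance:
# ~8 GB for 7B models, ~16 GB for 13B, ~32 GB for 33B.
# Both function names are hypothetical helpers for this sketch.
suggest_model_size() {
    ram_gb=$1
    if [ "$ram_gb" -ge 32 ]; then
        echo "up to 33B"
    elif [ "$ram_gb" -ge 16 ]; then
        echo "up to 13B"
    elif [ "$ram_gb" -ge 8 ]; then
        echo "up to 7B"
    else
        echo "smaller than 7B"
    fi
}

# Total RAM in GB, rounded up: /proc/meminfo on Linux, sysctl on macOS.
total_ram_gb() {
    if [ -r /proc/meminfo ]; then
        awk '/^MemTotal:/ { gb = $2 / 1048576; printf "%d\n", (gb == int(gb)) ? gb : int(gb) + 1 }' /proc/meminfo
    else
        echo $(( $(sysctl -n hw.memsize) / 1024 / 1024 / 1024 ))
    fi
}

echo "Suggested model size: $(suggest_model_size "$(total_ram_gb)")"
```

Rounding up compensates for the small amount of memory the kernel reserves, so a 16 GB machine is not reported as 15 GB.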

Conclusion

Ollama makes it possible to run AI models locally across Windows, macOS, and Linux. The installation is straightforward, and once it’s set up, you can immediately start experimenting with open-source models.

With Ollama, you get the freedom to learn, explore, and even build AI-powered applications—without paying monthly cloud fees.
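As a first step toward building an application, you can send a prompt to the local server over HTTP instead of using the interactive chat. The sketch below posts to Ollama's `/api/generate` endpoint on the default port. It assumes the `mistral` model is already downloaded and that the prompt contains no quotes or backslashes, since `build_request` (a hypothetical helper) does no JSON escaping.

```shell
#!/bin/sh
# Build the JSON body for the /api/generate endpoint.
# Assumption: model and prompt contain no quotes or backslashes,
# because this helper does not escape them.
build_request() {
    printf '{"model": "%s", "prompt": "%s", "stream": false}' "$1" "$2"
}

# Send one prompt to the local server and print the raw JSON reply.
generate() {
    curl -fsS --max-time 120 http://localhost:11434/api/generate \
        -d "$(build_request "$1" "$2")"
}

generate mistral "Why is the sky blue?" \
    || echo "Request failed: is the Ollama server running and the model downloaded?"
```

With `"stream": false` the server returns a single JSON object, and the generated text is in its `response` field.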

Adityo GW

I am currently working as a freelancer, involved in several website projects and server maintenance tasks.